- Many AI startups that secured large seed rounds are now struggling to advance to Series A.

- To survive, AI startups need to focus on the fundamentals that matter to investors.

- Pairing AI’s breakthroughs with real product-market fit is where the opportunity lies.

The generative AI boom of 2023 and 2024 felt like a gold rush. Excitement quickly turned to borderline frenzy, and every startup became an “AI something.” Now, as the initial euphoria fades, founders face a harsh reality: much of that activity is unsustainable without real substance. Investors and customers alike are growing more discerning. Avoiding the “AI bubble” means understanding where the technology truly stands, how the funding landscape has shifted and what it takes to build an AI startup that can survive the hype cycle and secure lasting investment.

The evolving AI landscape

AI continues to evolve at breakneck speed, dominating headlines and investor discussions globally. Over the past year, we’ve seen significant advancements in model capabilities and the emergence of new categories like agentic AI systems and AI infrastructure optimization. What was cutting edge just months ago is quickly becoming outdated.

This evolution isn’t just about models — it's about the foundation they're built on. The hardware race has expanded beyond GPUs to include memory, compilers and chip-aware model design. While hardware-aware design isn’t new — early models like bidirectional encoder representations from transformers (BERT) were built with tensor processing units (TPUs) in mind — today’s scale demands tighter integration between hardware and software.
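One common form of hardware-aware design is sizing matrix dimensions as multiples of the accelerator's matrix-unit tile, so that no compute is spent on padding. The sketch below assumes a tile size of 128 (the width of the matrix units on several TPU generations); real tile sizes vary by chip and data type, and the helper names are hypothetical.

```python
# Hypothetical sketch: estimate the compute wasted when a matrix dimension
# is not a multiple of the accelerator's matrix-unit tile size.
# TILE = 128 is an assumption; actual tile sizes differ across chips.
import math

TILE = 128

def padded_size(dim: int, tile: int = TILE) -> int:
    """Round dim up to the next multiple of tile, as the hardware effectively does."""
    return math.ceil(dim / tile) * tile

def wasted_fraction(dim: int, tile: int = TILE) -> float:
    """Fraction of the padded dimension that carries no useful work."""
    padded = padded_size(dim, tile)
    return (padded - dim) / padded

for hidden in (768, 1024, 1000, 1100):
    print(f"hidden={hidden:5d} padded={padded_size(hidden):5d} "
          f"wasted={wasted_fraction(hidden):.1%}")
```

BERT-base (hidden size 768) and BERT-large (1024) are both exact multiples of 128, so under this simple model they incur no padding at all.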

High-bandwidth memory (HBM) has become essential for AI workloads that need fast data access and high throughput. HBM3E is currently the frontrunner, with manufacturers like SK Hynix and Micron leading the charge. But HBM comes with a steep price tag, far higher than conventional DRAM, which is fueling research into cheaper alternatives like spin-transfer torque MRAM (STT-MRAM) and SanDisk’s High Bandwidth Flash (HBF). That research, however, remains in its early stages.
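Why bandwidth matters so much can be seen with a back-of-the-envelope estimate. Assuming single-stream (batch size 1) generation, where every weight must be streamed from memory once per token, memory bandwidth rather than raw FLOPs sets the ceiling on speed. The model size and the ~3.35 TB/s bandwidth below are hypothetical inputs, and the estimate ignores KV-cache traffic and compute time.

```python
# Rough upper bound on single-stream decode speed for a memory-bound LLM.
# Assumption: each generated token requires reading every weight once,
# so tokens/s <= memory bandwidth / model size in bytes.

def max_tokens_per_second(params_billions: float,
                          bytes_per_weight: float,
                          bandwidth_tb_s: float) -> float:
    model_bytes = params_billions * 1e9 * bytes_per_weight
    bandwidth_bytes_s = bandwidth_tb_s * 1e12
    return bandwidth_bytes_s / model_bytes

# Hypothetical inputs: a 70B-parameter model stored as 16-bit weights
# (2 bytes each) on an accelerator with ~3.35 TB/s of HBM bandwidth.
print(f"{max_tokens_per_second(70, 2, 3.35):.1f} tokens/s (upper bound)")
```

Halving `bytes_per_weight` (for example, by moving to 8-bit weights) doubles this ceiling, which is one reason memory bandwidth and quantization tend to be discussed together.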

Compiler innovation is also seeing renewed focus. Compilers have long been key to maximizing hardware efficiency, but in AI their importance has grown substantially: optimizing models to run faster and consume less energy has become a high-leverage investment. A well-optimized compiler improves speed and energy efficiency, but only if the underlying chip design supports it. History is littered with costly failures in which inadequate or infeasible compiler support undermined the promise of otherwise powerful hardware.
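A toy illustration of one such optimization, operator fusion, is sketched below. It assumes a simplified cost model in which each elementwise op, run as its own kernel, reads its whole input from memory and writes its whole output back, while a fused kernel reads once, applies every op in registers and writes once. Production AI compilers such as XLA and TVM perform fusion on tensor graphs rather than Python lists; the function names here are hypothetical.

```python
# Toy model of compiler operator fusion and the memory traffic it saves.
# Assumption: traffic is counted as element reads + writes to "memory".
from typing import Callable, List, Sequence, Tuple

Op = Callable[[float], float]

def unfused(xs: Sequence[float], ops: List[Op]) -> Tuple[List[float], int]:
    """Run each op as a separate pass over the data."""
    traffic = 0
    data = list(xs)
    for op in ops:
        data = [op(x) for x in data]   # one full read and one full write
        traffic += 2 * len(data)
    return data, traffic

def fused(xs: Sequence[float], ops: List[Op]) -> Tuple[List[float], int]:
    """Apply all ops to each element in a single pass."""
    out = []
    for x in xs:
        for op in ops:
            x = op(x)                  # intermediate values never leave "registers"
        out.append(x)
    return out, 2 * len(xs)            # one read and one write per element

# Scale, bias, ReLU: a common elementwise chain.
ops: List[Op] = [lambda x: x * 2.0, lambda x: x + 1.0, lambda x: max(x, 0.0)]
xs = [-3.0, -0.25, 0.5, 4.0]

out_unfused, t_unfused = unfused(xs, ops)
out_fused, t_fused = fused(xs, ops)
assert out_unfused == out_fused        # same result, less traffic
print(t_unfused, t_fused)
```

The fused version moves a third as much data for this three-op chain; on real hardware, where memory bandwidth is often the bottleneck, that difference translates directly into speed and energy.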

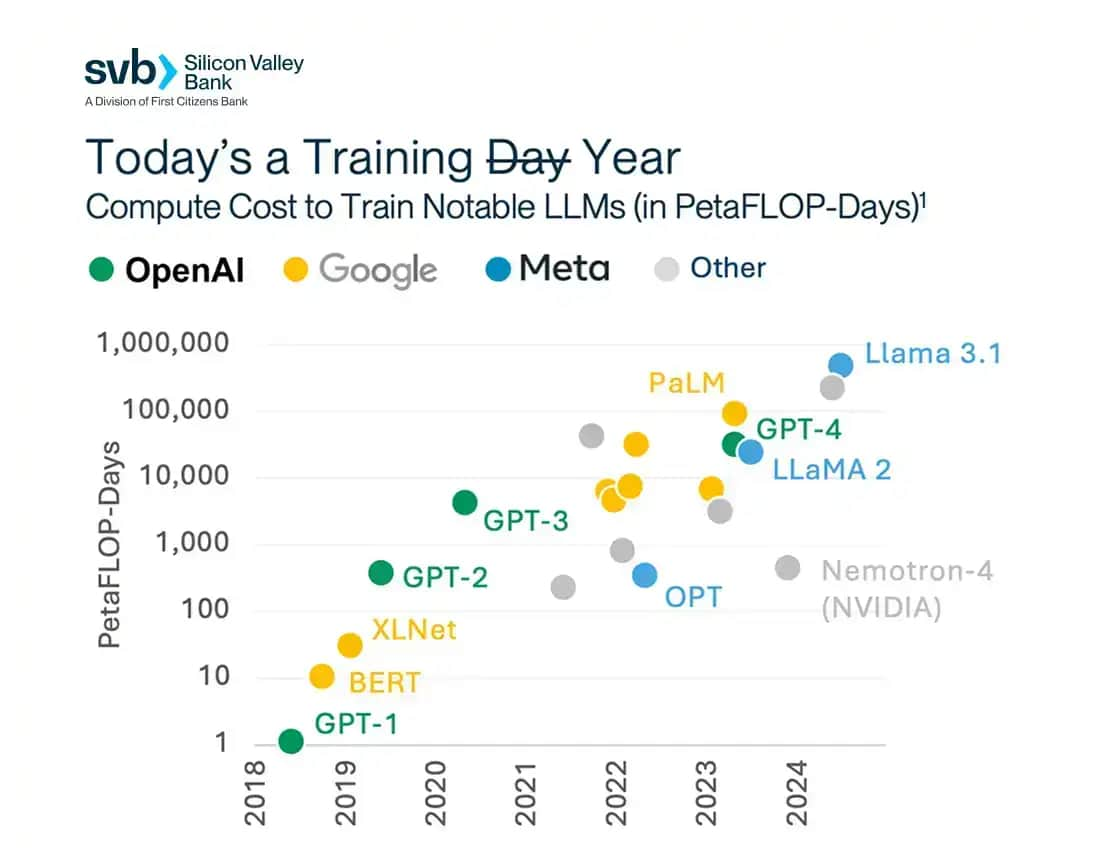

The cost of compute remains a major concern. Training models now routinely requires hundreds of millions of dollars — Meta’s LLaMA 3.1, for instance, reportedly took 1,200 PetaFLOP-years to train on over a trillion words (see the chart below).

1Compiled from company websites and SVB analysis.
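To make the 1,200 petaFLOP-year figure concrete, the sketch below converts it into total floating-point operations and then into GPU-hours. The per-GPU peak throughput (1e15 FLOP/s) and the 40% utilization are hypothetical round numbers, not Meta's reported setup; multiplying the result by an assumed hourly GPU price would give a rough cost estimate.

```python
# Convert petaFLOP-years into total FLOPs, then into GPU-hours under
# hypothetical hardware assumptions (not any vendor's or lab's real figures).
SECONDS_PER_YEAR = 365.25 * 24 * 3600
PETA = 1e15

def petaflop_years_to_flops(pf_years: float) -> float:
    """One petaFLOP-year = 1e15 FLOP/s sustained for one year."""
    return pf_years * PETA * SECONDS_PER_YEAR

def gpu_hours(total_flops: float, peak_flops: float, utilization: float) -> float:
    """Hours of work for one GPU sustaining peak_flops * utilization."""
    return total_flops / (peak_flops * utilization) / 3600

total = petaflop_years_to_flops(1_200)
# Hypothetical: ~1e15 FLOP/s peak 16-bit throughput per GPU, 40% utilization.
hours = gpu_hours(total, peak_flops=1e15, utilization=0.4)
print(f"total ~ {total:.2e} FLOPs, ~ {hours / 1e6:.0f} million GPU-hours")
```

Because the GPU-hour figure scales inversely with utilization, the compiler and efficiency work described above feeds straight into training cost.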

As a result, demand for GPUs continues to outstrip supply, driving prices up and forcing companies to optimize aggressively. Compiler efficiency, model sparsity and quantization (which reduces the number of bits used to store each model weight) are becoming critical for reducing cost without significantly sacrificing performance. Nvidia’s hardware support for low-bit formats such as FP8 and FP4 underscores this trend.
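As an illustration of what quantization does, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is one common scheme, not the only one: production toolchains often use per-channel scales, calibration data and other low-bit formats. The weights are randomly generated stand-ins, and the function names are hypothetical.

```python
# Minimal sketch of symmetric per-tensor int8 quantization.
# Each float weight is stored as an integer in [-127, 127] plus one shared scale.
import random
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to integers in [-127, 127] sharing one scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]

random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(10_000)]  # stand-in layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding to the nearest integer bounds the error by half a quantization step.
max_err = max(abs(w - r) for w, r in zip(weights, restored))
# As 32-bit floats these weights need 4 bytes each; as int8, 1 byte each.
print(f"scale={scale:.6f} max_error={max_err:.6f} bound={scale / 2:.6f}")
```

The 4x reduction in storage also cuts the bytes that must be moved from memory per token, which is where much of the inference speedup comes from.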

We’re also seeing innovation in hardware and accessibility. Groq’s LPU is purpose-built for fast, efficient inference, while DeepSeek Coder V2 shows how open-source models can push performance and reach. These developments reflect a shift in where the next wave of AI differentiation will happen — not just in capabilities, but in infrastructure and efficiency.

What’s happening to AI investment and the valuation bubble?

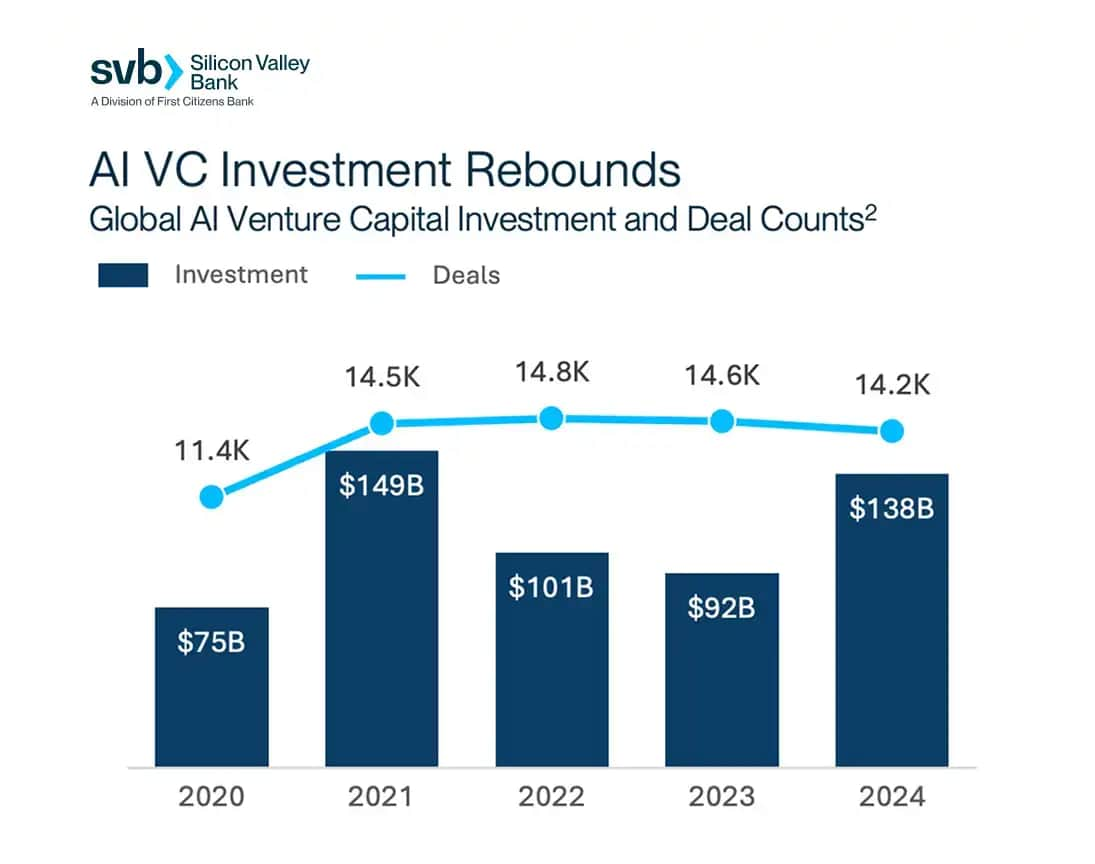

According to PitchBook, global venture capital investment into AI was nearly $140B in 2024, but investor appetite is becoming more tempered (see chart below). Many AI startups that secured large seed rounds are now struggling to advance to Series A, with graduation rates falling as investors demand clearer paths to sustainable business models. Valuations still reflect peak hype, but many companies are falling short and are unable to meet expectations at the growth stage.

2Data from Pitchbook Data, Inc. and SVB analysis.

The trajectory is starting to mirror past tech cycles like Web3: early excitement drives rapid initial adoption and a spike in spending, but many companies struggle to convert that into a lasting business. Revenue often fades as the novelty wears off and usage drops, leaving companies unable to prove product-market fit or sustain engagement. Investors are now recalibrating expectations, prioritizing companies with sustainable models and customer stickiness.

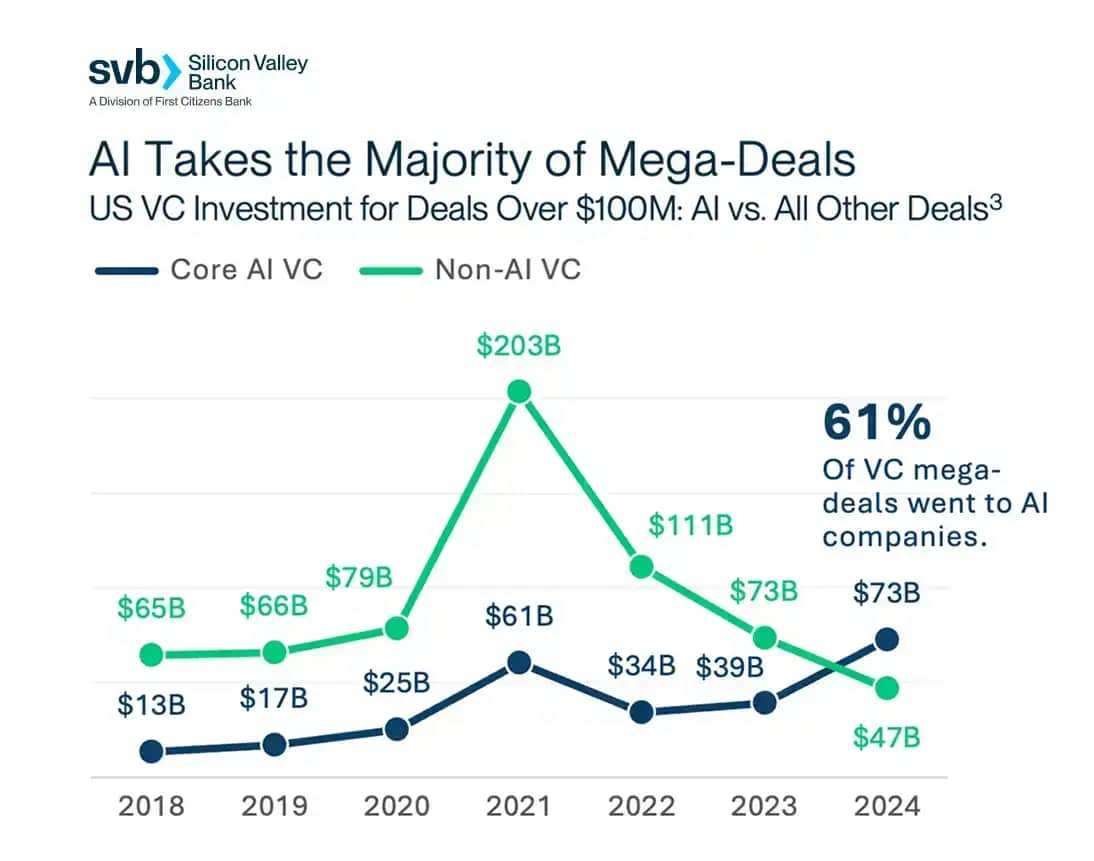

Despite this, AI is also now the defining force behind the broader venture recovery. AI companies received more mega-deal capital in 2024 ($73B) than non-AI companies ($47B) for the first time, marking a true shift in venture capital dynamics. AI accounted for 48% of all VC-backed companies and 61% of all mega-deal volume.

3Data from Pitchbook Data, Inc. and SVB analysis.
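As a quick consistency check using only the two dollar figures in this section, and assuming “mega-deal volume” refers to capital rather than deal count, AI's share of mega-deal capital works out to the 61% cited:

```latex
\text{AI share} = \frac{\$73\text{B}}{\$73\text{B} + \$47\text{B}} = \frac{73}{120} \approx 0.608 \approx 61\%
```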

Why it’s harder than ever to build a long-lasting native AI company

Founders now face a tougher environment. The AI sector is deep into a hype cycle, and history suggests most startups born in such times won’t survive the inevitable market correction. Short-term user enthusiasm often fades, especially for agent-style AI tools and copilots, which see rapid adoption followed by equally rapid drop-offs. As in past tech booms, companies built primarily on novelty risk disappearing once the excitement passes.

Meanwhile, the commoditization of models is accelerating. Open-source models and easy API access mean technical advantages disappear quickly unless paired with unique datasets or deep integration into customer workflows.

The global hardware arms race is compounding the challenge. Soaring compute and memory costs, combined with growing competition from China and India, are eroding technical moats faster than before.

How to build a long-lasting native AI company

To survive and thrive, native AI startups need to focus on five fundamentals that matter to investors now:

1. Go-to-market (GTM) execution

Strong GTM execution is non-negotiable for native AI companies. Investors are looking for startups with clear sales pipelines, real customer traction and the ability to turn experiments into revenue.

Achieving this requires embedding AI tools directly into core customer workflows, where deep integration lowers churn and raises switching costs, driving both adoption and defensibility.

Customer obsession and product-led growth are equally vital for building sustainable recurring revenue. From a GTM perspective, AI startups must be crystal clear about their ideal customer profile (ICP) and understand exactly how to sell to their buyers.

2. The intellectual property (IP) moat

In the age of generative AI, the idea of what makes technology defensible is changing. With widespread access to off-the-shelf models and APIs, many companies are building similar products using the same underlying technology. As a result, technical IP alone is no longer a strong moat.

Today, defensibility depends on proprietary datasets, domain expertise or new approaches that are hard for others to replicate. In regulated or specialized industries like healthcare or finance, access to clean, unique datasets can help train specialized models and offer companies a competitive edge.

A strong moat may also come from a data flywheel, where user interactions continuously improve the model for that specific use case, or from unique algorithms, deep customer integration or superior user experience.

3. Efficiency over compute

The old model of winning AI dominance through brute compute power is becoming unsustainable due to rising costs, energy demands and hardware shortages. Today, advantage lies in efficiency.

Compiler optimization, model sparsity and hardware-aware design help companies maximize performance within tight compute and memory budgets while cutting costs. Native AI startups focusing on these areas will be better positioned to scale profitably and defensibly.
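Sparsity can be illustrated with unstructured magnitude pruning, one of the simplest ways to obtain it: zero out the fraction of weights with the smallest absolute values. This is a minimal sketch with hypothetical names and toy weights; turning sparsity into real speedups usually requires kernel or hardware support for the resulting pattern (for example, Nvidia's structured 2:4 sparsity).

```python
# Minimal sketch of unstructured magnitude pruning.
from typing import List

def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)              # number of weights to drop
    # Indices sorted by magnitude, smallest first; sorted() is stable,
    # so ties are broken by original position.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

w = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2, -0.02, 0.5]
pruned = magnitude_prune(w, 0.5)
print(pruned)  # [0.9, 0.0, 0.3, 0.0, -0.7, 0.0, 0.0, 0.5]
```

In practice, pruning is usually followed by fine-tuning so the remaining weights can compensate for the ones removed.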

4. Positioning your brand and founder fit

In a crowded AI market, standing out requires more than building with the latest tech; it requires clarity of purpose. The startups that break through are led by founders with deep domain expertise, product intuition and a clear sense of why their problem matters now. These founders build with purpose, and their brand reflects a clear, compelling narrative rooted in customer pain points.

5. Recurring revenue and retention

Outperformers will be companies with real customers, strong retention and measurable value delivery. Recurring revenue models and high net dollar retention are critical proof points for AI companies. Founders must show that their AI product is mission-critical, not a novelty customers try once and abandon. Long-term value capture is what separates companies that last from those that fade.

To prove this, go beyond vanity metrics like monthly active users (MAUs). Instead, look at daily active users (DAUs), DAU/MAU ratios, cohort retention and renewal rates (especially for B2B).
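The metrics above can be computed from a raw activity log and a revenue snapshot. The sketch below assumes a simple log of `(user_id, date)` events, a fixed 30-day window for MAU, calendar-month cohorts and per-customer recurring revenue at two points in time; all of these formats and function names are illustrative assumptions rather than a standard definition.

```python
# Sketch of engagement and retention metrics from an activity log.
from collections import defaultdict
from datetime import date, timedelta
from typing import Dict, List, Set, Tuple

Event = Tuple[str, date]  # (user_id, day the user was active)

def dau_mau_ratio(events: List[Event], end: date) -> float:
    """Average DAU over the 30 days ending at `end`, divided by distinct
    users active in that window ("stickiness")."""
    start = end - timedelta(days=29)
    window = [(u, d) for u, d in events if start <= d <= end]
    mau = len({u for u, _ in window})
    if mau == 0:
        return 0.0
    daily: Dict[date, Set[str]] = defaultdict(set)
    for u, d in window:
        daily[d].add(u)
    avg_dau = sum(len(users) for users in daily.values()) / 30  # idle days count as 0
    return avg_dau / mau

def month_index(d: date) -> int:
    """Number months consecutively so month arithmetic is simple."""
    return d.year * 12 + d.month - 1

def cohort_retention(events: List[Event], cohort_month: int, offset: int) -> float:
    """Share of users first seen in cohort_month who are active `offset` months later."""
    first_seen: Dict[str, int] = {}
    active: Dict[str, Set[int]] = defaultdict(set)
    for u, d in events:
        m = month_index(d)
        first_seen[u] = min(m, first_seen.get(u, m))
        active[u].add(m)
    cohort = [u for u, m in first_seen.items() if m == cohort_month]
    if not cohort:
        return 0.0
    retained = sum(1 for u in cohort if cohort_month + offset in active[u])
    return retained / len(cohort)

def net_dollar_retention(start_rev: Dict[str, float], end_rev: Dict[str, float]) -> float:
    """Current revenue from customers present at the start (expansion minus
    contraction and churn), divided by their starting revenue. New customers
    are excluded."""
    base = sum(start_rev.values())
    kept = sum(end_rev.get(c, 0.0) for c in start_rev)
    return kept / base

events = [("a", date(2025, 1, 3)), ("b", date(2025, 1, 10)),
          ("a", date(2025, 2, 4)), ("c", date(2025, 2, 5))]
print(cohort_retention(events, month_index(date(2025, 1, 1)), 1))  # 0.5
print(dau_mau_ratio(events, date(2025, 2, 28)))
print(net_dollar_retention({"x": 100.0, "y": 50.0}, {"x": 130.0, "z": 40.0}))  # 130 / 150
```

Note that new customer `z` does not lift net dollar retention; the metric isolates whether existing customers expand or shrink, which is why investors treat it as a stickiness signal.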

The bottom line

The froth of speculative funding and one-off chatbot apps will inevitably settle. But within that cohort are the seeds of truly transformative companies that could define the next decade of technology. The opportunity is enormous for those who can pair AI’s breakthroughs with real product-market fit and solid business fundamentals.

As the market matures, areas like physical AI, compiler innovation and compute efficiency will only grow in importance. Success won’t go to those who are first to launch but to those who can build sustainably and defensibly.

If past cycles have taught us anything, it’s that the fundamentals still hold: manage burn wisely, find true product-market fit and iterate constantly. In the end, durability matters more than noise.

Acknowledgement

Thank you to Nuno Lopes of Furiosa AI, Amy Wu Martin of Menlo Ventures, Andy Weissman of Union Square Ventures and Tanay Jaipuria of Wing VC for sharing their expertise and providing helpful insights for this article.